TL;DR: The AI Incident Database encourages people to report AI harm events to help produce safer AI systems and digital ecosystems. The project maintains a database that tracks AI-related incidents, giving developers and researchers the data they need to mitigate and avoid adverse outcomes. We spoke with Sean McGregor, Director of the Digital Safety Research Institute at UL Research Institutes and originator of the AI Incident Database, about the project's mission, AI's future, and the importance of reporting harm events.

"There are lessons in every failure we should be learning from," said Sean McGregor, Director of the Digital Safety Research Institute at UL Research Institutes and originator of the AI Incident Database. AI presents a universe of possibilities, much of it good. But it is still an emerging technology with much ground to cover in testing and maturity.

According to a YouGov survey, 54% of Americans polled describe their feelings about AI as "cautious," while 22% used the word "scared." These feelings are understandable, as AI is still in uncharted waters.

AI is new and remains somewhat unfamiliar to mainstream audiences, and news of AI harm incidents gives anyone reason to proceed with caution. For AI to have a safe and certain future, society must learn from its mistakes and establish proper guardrails.

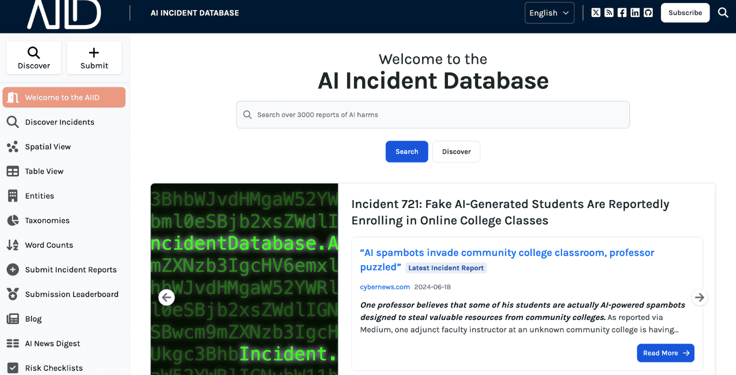

The AI Incident Database is a project that seeks to promote the safer use of AI systems. Much as the airline industry records and learns from crashes, the AI Incident Database collects reports of harmful AI events.

“It’s a powerful modality to ensuring that when someone is harmed and an AI system is implicated, we can make it so that it doesn’t happen again. We can engineer a safer world in the presence of AI systems,” said Sean.

Gathering these reports can help identify vulnerabilities, inform responses, and educate stakeholders as they adopt AI. The AI Incident Database lets users report harm events in support of AI harm reduction.

A Database for Archiving AI Harm Events

Before founding the AI Incident Database, Sean used reinforcement learning to research wildfire suppression policy. The project focused on the factors involved in deciding when to suppress a wildfire and when to let it burn.

“I started this research project in 2010 and quickly concluded that it is incredibly difficult to make quote-unquote safe decisions. Very often, the definition of safety with respect to these systems is dependent on perspective,” said Sean.

Studying the competing interests in wildfire suppression policy led him to the AI safety space. Sean later helped structure the IBM Watson AI XPRIZE, a global competition to promote AI for good. His role was to be the skeptic who examined what negative impacts could arise in the pursuit of good.

“That helped inspire the start of the AI Incident Database because we needed to figure out what to worry about. Having a collection of the things that have happened in the world that we don’t want to incentivize to happen again was very useful,” said Sean.

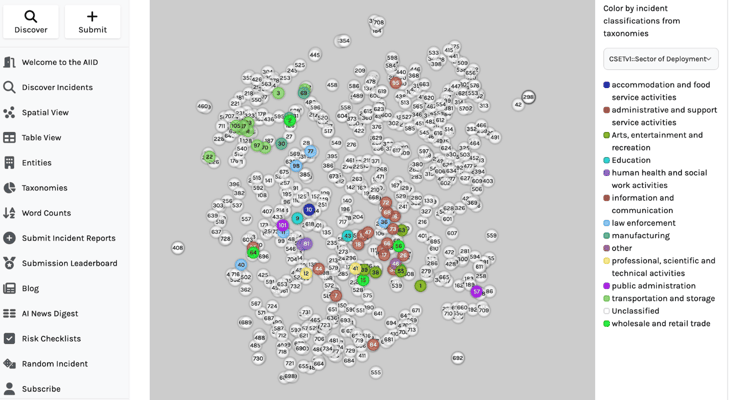

The AI Incident Database tracks and records real-world AI harm events to help create a safer world in the presence of AI. Its repository informs researchers and developers about the negative impacts of AI so they can mitigate or avoid bad outcomes.

As more AI solutions emerge, companies will need to understand AI incidents to build safer products and protect end users and their teams from harmful events. This is why transparency matters, and the AI Incident Database seeks to make that transparency a reality.

Reducing AI Harm is Everyone’s Responsibility

Sean told us that responsibility for AI safety doesn't rest with any single party. It's everyone's responsibility.

“Everyone is playing a role and must play a role in making a safe digital ecosystem and a particularly safe information ecosystem since we’re all participants in it. We’re all elevating or not elevating things,” said Sean.

We all participate in online discourse and AI interactions. AI has advanced so quickly that users can now generate synthetic messages and responses to almost anything and simply copy and paste them. This can cause harm and sow doubt about real-world events and communication.

Deepfakes illustrate the challenge, which is twofold. Deepfake technology can mislead people about reality and spread misinformation. But it can also make people lose trust in what they see, even when the evidence is factual and genuine.

“We are building a lot of the systems necessary to address that. So that you can’t have what in the security world is a simple attack of false identities that are just printed en masse and destroying the ability to communicate with people,” said Sean.

Sean said the release of ChatGPT helped people recognize that they have to work together to address these problems. Because AI's future is largely unforeseeable, companies, developers, and policymakers will have to collaborate on proactive measures instead of waiting to see what happens.

“We need to have a lot more technologists in government — people who understand the space and can figure out what the government response should be here. And that’s a generational task and need,” said Sean.

Responsible AI is Possible With Education and Awareness

Responsible AI is achievable, but the journey starts with education and awareness at every stage, from primary schools to enterprises.

“The most important thing we can do is likely education and reducing the guild-like boundaries between policy and engineering technology, where neither group knows how to work with the other,” said Sean.

AI is exacerbating many existing challenges, including cybercrime, and solving them will require teams to work cross-functionally. Users will also need help identifying AI-generated content, whether through tools or educational programs.

Sean told us that everyone is already encountering AI systems in various forms, such as chatbots, prompting, and coding companions, and that their presence will only grow. He doesn't view anything AI-related as small or insignificant because AI affects billions of people.

“So I would encourage everyone to report whenever an AI system has harmed them so that can result in better AI systems being produced and make the whole world better off,” said Sean.